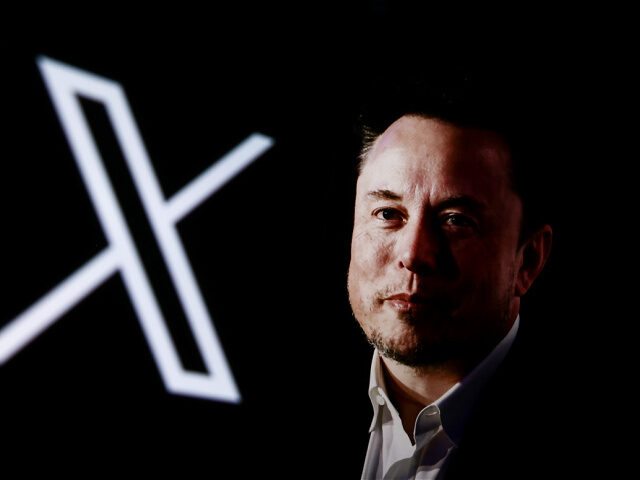

Ashley St. Clair, mother of one of Elon Musk’s sons, has filed a lawsuit against social media giant X. She alleges the platform hosts explicit AI-generated deepfakes depicting her and, more troublingly, children. The lawsuit draws attention to mounting anxiety over how quickly AI tools can produce harmful content.

Ashley St. Clair, Mother of Elon Musk’s Child, Sues X over AI Sexfakes

Key Takeaways:

- Ashley St. Clair, the mother of one of Elon Musk’s children, has sued X.

- The lawsuit alleges that X hosts explicit AI-generated deepfakes depicting St. Clair and children.

- The case highlights growing concern over the misuse of AI to create sexual content.

- The legal action reflects broader debates over platform responsibility.

- This development underscores the intersection of technology and law.

Background on the Lawsuit

Ashley St. Clair, a public figure connected to Elon Musk through their child, has taken legal action against the social media platform X. According to reports, her complaint centers on the widespread presence of AI-generated images that depict her and, disturbingly, children in sexual situations.

Allegations of AI-Generated Content

In her lawsuit, St. Clair asserts that X has not taken adequate measures to remove or control the circulation of these explicit deepfakes. The technology behind such manipulations allows users to fabricate convincing yet entirely false images that can be easily shared online. This legal complaint calls attention to the formidable challenge of regulating rapidly advancing technology.

Growing Concern Over Deepfakes

Deepfake technology, which uses artificial intelligence to generate or manipulate images, for example by superimposing one person’s face onto another’s body, has drawn increasing scrutiny. Experts caution that when used maliciously, deepfakes can blur the line between reality and fabrication, making it harder for victims to protect their reputations and for platforms and law enforcement to identify and remove harmful content.

Legal and Ethical Implications

Although the outcome of St. Clair’s lawsuit remains to be seen, it raises pressing questions about online platforms’ accountability for policing fabricated and exploitative material. With children allegedly depicted in these deepfakes, the stakes of this legal battle extend beyond personal harm, prompting a broader conversation about digital ethics, privacy, and corporate responsibility in the face of evolving AI technology.