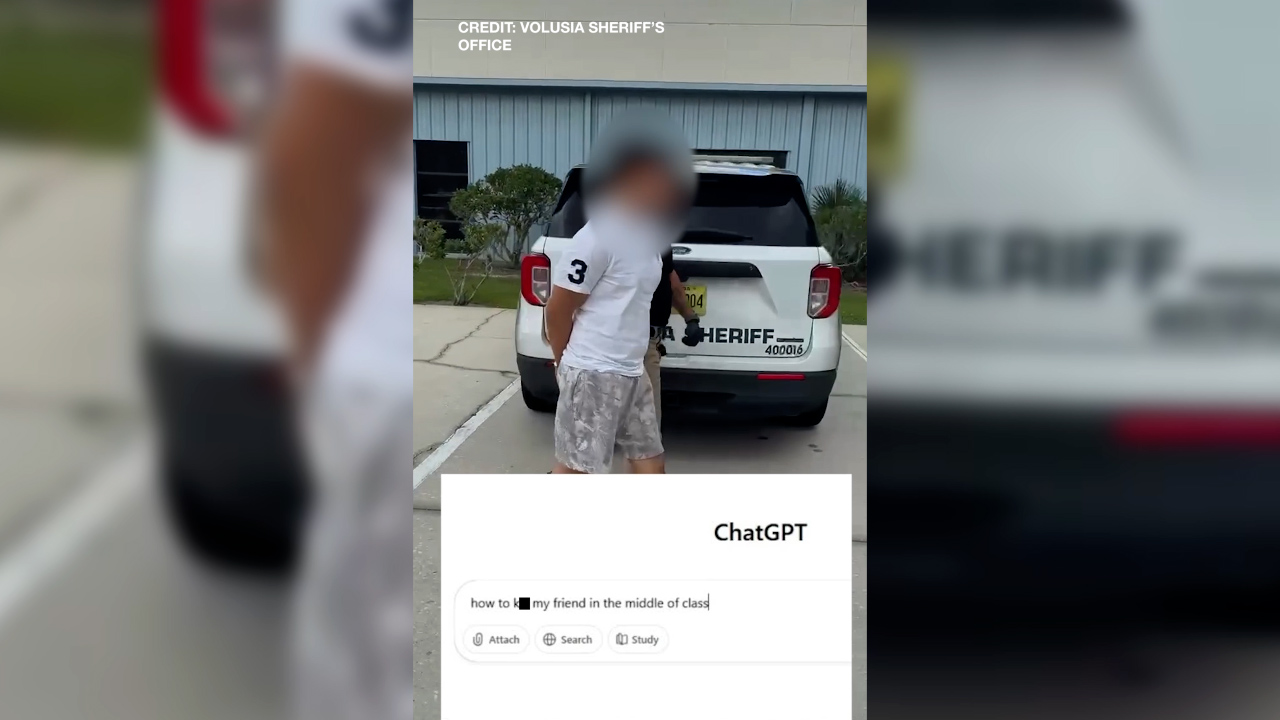

A 13-year-old student in Florida found himself in legal trouble after asking ChatGPT for advice on how to kill his friend. Authorities discovered this troubling request, prompting a swift arrest by the Volusia Sheriff’s Office. The incident underscores the risks of misusing artificial intelligence.

Florida student asks ChatGPT how to kill his friend, ends up in jail: deputies

Key Takeaways:

- A 13-year-old boy was arrested

- The incident happened in Florida

- ChatGPT was used to pose a violent question

- The Volusia Sheriff’s Office intervened

- The story was published by WFLA on 2025-10-01

The Arrest

A 13-year-old boy in Florida was detained after posing a disturbing question to ChatGPT. According to the Volusia Sheriff’s Office, the boy asked the AI service how to kill his friend, a query deputies deemed violent enough to warrant immediate action.

AI at the Center

The situation unfolded when the boy’s question to ChatGPT drew attention because of its explicit nature. While the full contents of the request remain undisclosed, deputies cited the direct threat it posed as their primary cause for concern.

Law Enforcement Response

The Volusia Sheriff’s Office responded swiftly and took the boy into custody. Officials stated that public safety was their top priority and that using an AI service to make a threatening inquiry was a significant red flag.

Broader Implications

This case highlights growing concerns over the misuse of accessible artificial intelligence tools. It raises questions about how law enforcement, schools, and communities can address dangerous online behavior, especially among younger users.